Qwen-2-5-VL Image and Video Recognition DEMO

1. Introduction

Qwen-2-5-VL is a multimodal large language model (MLLM) launched by the Alibaba Tongyi Qianwen team. It is part of the Qwen-2 series of models and supports multimodal interaction between vision and language.

1. Features

- Dynamic resolution processing: Adaptively adjust image resolution to balance accuracy and computational efficiency.

- Cross-modal alignment: Aligning visual-linguistic feature spaces through contrastive learning to improve modal interaction capabilities.

- Low resource adaptation: supports lightweight deployment solutions such as quantization and LoRA fine-tuning.

2. Project Directory

Qwen2-5-VL

├── datasets

│ ├──images # By default, there is an image named panda.jpg

│ └──videos # By default, there is a video named carvana_video.mp4

├── models

│ └── BM1684X

│ └── qwen2.5-vl-3b_bm1684x_w4bf16_seq2048.bmodel # BM1684X qwen2.5-vl-3b model

├── python

│ ├── __pycache__

│ ├── configs # Configuration files

│ ├── qwen2_5_vl.py # Startup program

│ ├── README.md # Instruction document

│ ├── vision_process.py # Visual data preprocessing file

│ └── requirements.txt # Python dependencies

├── scripts

│ ├── compile.sh

│ ├── datasets.zip

│ ├── download_bm1684x_bmodel.sh # Download script for the BM1684X box model

│ ├── download_bm1688_bmodel.sh # Download script for the BM1688 box model

│ └── download_datasets.sh # Dataset download script

└── tools # Toolkit2. Operation steps

1. Prepare Python environment, data and model

1.1 First upgrade the python version to 3.10

sudo add-apt-repository ppa:deadsnakes/ppa

sudo apt update

sudo apt install python3.10 python3.10-dev

# Create a virtual environment (without pip packages). Each time you run it in the future, you need to switch to the virtual environment according to the steps.

cd /data

# Create a virtual environment (without pip)

python3.10 -m venv --without-pip myenv

# Enter the virtual environment

source myenv/bin/activate

# Manually install pip

curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py

python get-pip.py

rm get-pip.py

# Install dependent libraries

pip3 install torchvision pillow qwen_vl_utils transformers --upgrade1.2 Copy the official Qwen2-5-VL project directory of Suanneng (or copy and upload Qwen2_5-VL to /data in the box)

git clone https://github.com/sophgo/sophon-demo.git

cd sophon-demo/sample/Qwen2_5-VL

cd /data/Qwen2_5-VL ##If only the LLM_api_server has been uploaded, you only need to operate within this directory.1.3 Preparing the operating environment

No need to modify the memory on PCIe, the following is related to the soc mode: For 1684X series devices (such as SE7/SM7), the environment preparation can be completed in this way to meet the Qwen2.5-VL operating conditions. First, make sure to use V24.04.01 SDK. You can check the SDK version through the bm_version command. If you need to upgrade, you can get the v24.04.01 version SDK from sophgo.com. The flash package is located in sophon-img-xxx/sdcard.tgz. Refer to the corresponding product manual for flashing.

After ensuring the SDK version, in the 1684x SoC environment, refer to the following command to modify the device memory

cd /data/

mkdir memedit && cd memedit

wget -nd https://sophon-file.sophon.cn/sophon-prod-s3/drive/23/09/11/13/DeviceMemoryModificationKit.tgz

tar xvf DeviceMemoryModificationKit.tgz

cd DeviceMemoryModificationKit

tar xvf memory_edit_{vx.x}.tar.xz #vx.x is the version number

cd memory_edit

./memory_edit.sh -p #This command will print the current memory layout information.

./memory_edit.sh -c -npu 7615 -vpu 2048 -vpp 2048 #If it is on the 1688 platform, please modify it to: ./memory_edit.sh -c -npu 10240 -vpu 0 -vpp 3072

sudo cp /data/memedit/DeviceMemoryModificationKit/memory_edit/emmcboot.itb /boot/emmcboot.itb && sync

sudo reboot1.4 Install unzip and prepare test data set

sudo apt install unzip

chmod -R +x scripts/

./scripts/download_bm1684x_bmodel.sh ##Download the model file

./scripts/download_datasets.sh ##Download the dataset2. Python routine

2.1 Environmental Preparation

# Additionally, you may need to install other libraries

cd /data/Qwen2_5-VL/python

pip3 install dfss -i https://pypi.tuna.tsinghua.edu.cn/simple --upgrade

pip3 install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

# You need to install SILK2.Tools.logger

python3 -m dfss --url=open@sophgo.com:tools/silk2/silk2.tools.logger-1.0.2-py3-none-any.whl

pip3 install silk2.tools.logger-1.0.2-py3-none-any.whl --force-reinstall

rm -f silk2.tools.logger-1.0.2-py3-none-any.whl

# This routine depends on sophon-sail. You can directly install sophon-sail by executing the following commands:

pip3 install dfss --upgrade

python3 -m dfss --install sail

# You need to download the operation configuration file. Execute the following commands:

python3 -m dfss --url=open@sophgo.com:sophon-demo/Qwen2_5_VL/configs.zip

unzip configs.zip

rm configs.zip2.2 Start the test

Parameter Description

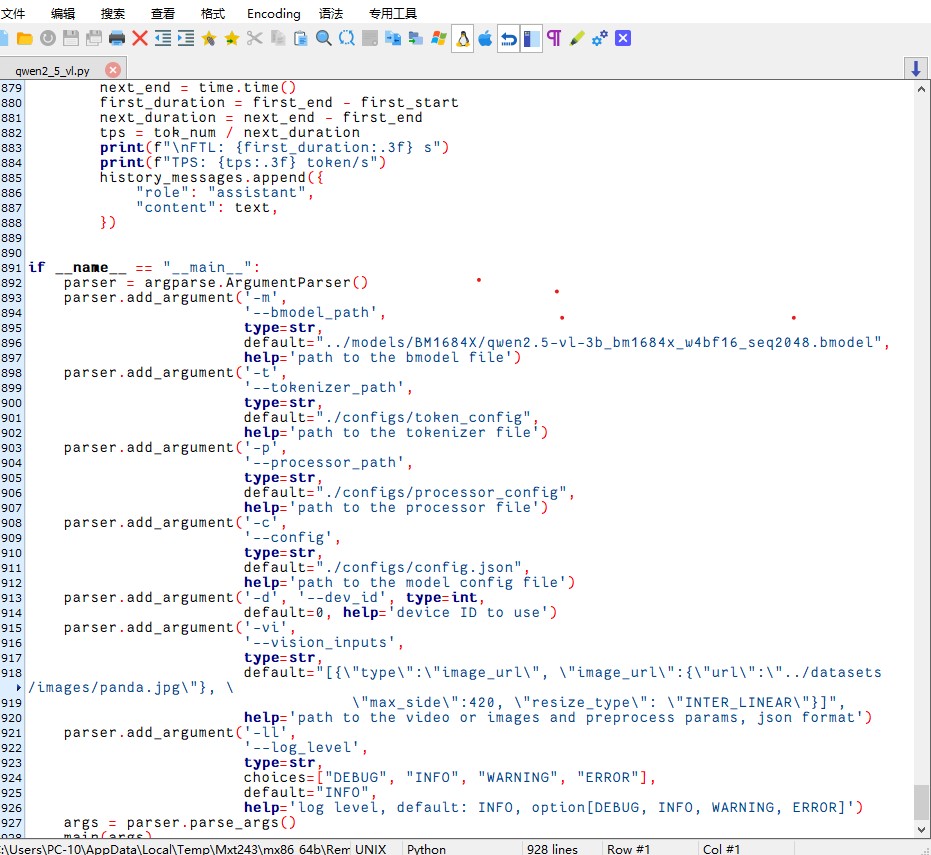

You can test it by modifying the content in qwen2_5_vl.py. The content is as shown in the figure:

The default path of bmodel in line 896 needs to be changed to: ../models/BM1684X/qwen2.5-vl-3b_bm1684x_w4bf16_seq2048.bmodel

How to use

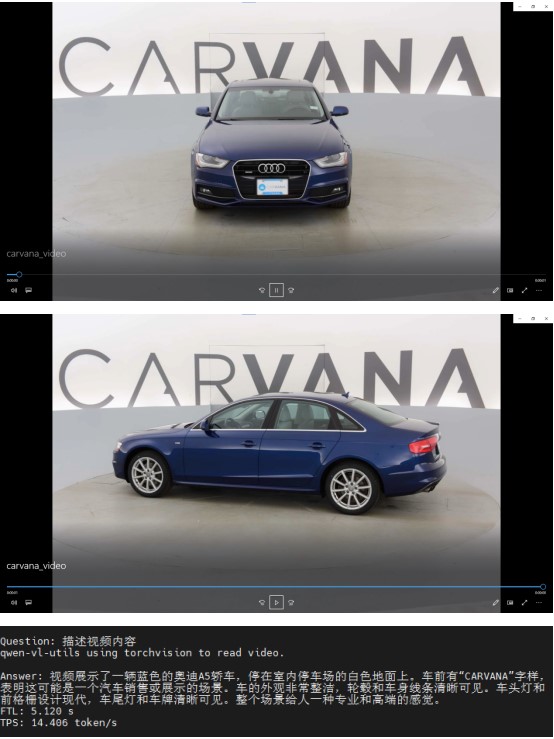

# Video recognition

python3 qwen2_5_vl.py --vision_inputs="[{\"type\":\"video_url\",\"video_url\":{\"url\": \"../datasets/videos/carvana_video.mp4\"},\"resized_height\":420,\"resized_width\":630,\"nframes\":2}]"

# Image recognition

python3 qwen2_5_vl.py --vision_inputs="[{\"type\":\"image_url\",\"image_url\":{\"url\": \"../datasets/images/panda.jpg\"}, \"max_side\":420}]"

# at the same time

python3 qwen2_5_vl.py --vision_inputs="[{\"type\":\"video_url\",\"video_url\":{\"url\": \"../datasets/videos/carvana_video.mp4\"},\"resized_height\":420,\"resized_width\":630,\"nframes\":2},{\"type\":\"image_url\",\"image_url\":{\"url\": \"../datasets/images/panda.jpg\"}, \"max_side\":840}]"

# Pure text conversation

python3 qwen2_5_vl.py --vision_inputs=""Effects

You can add pictures or videos to the folder for testing.